Making Good Photorealistic 3D Models from 2D Pictures

Making 3D models is time-consuming. Recent programs like Google’s SketchUp (which is free) have simplified the process of making digital 3D models, but SketchUp is far from automatic.

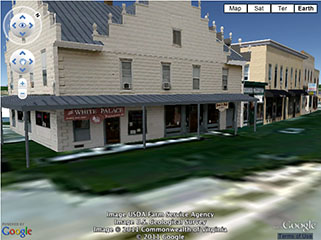

To make a 3D model look photorealistic, real-world pictures can be “projected” onto a SketchUp model. While this technique adds realism, SketchUp is still a manual approach that can take hours, weeks, or even months to produce good results.

Many in the 3D and animation world would like an automatic process that can produce 3D models from a series of 2D pictures. Our goal is to create a system that automatically produces photorealistic digital 3D models that can be processed in existing 3D programs like 3D Studio Max, GeoMagic, or SketchUp.

The Microsoft Photosynth project can create 3D-like effects (some call it 2.5D) by automatically processing tens to hundreds of 2D images. While this process is automatic, it does not produce a 3D model that can be used by other programs.

Garbage in, garbage out.

A challenge for Photosynth and other automatic stitching/panoramic approaches is that they often use regular, uncalibrated cameras. While this is convenient, it forces the programs to analyze each camera image to determine the field of view and other essential lens/camera characteristics: the cameras are essentially calibrated during processing. Precisely calibrating a camera is challenging even in a lab setting, so it is reasonable to expect that on-the-fly calibration results will not be very precise. Errors in the camera calibration step compound and cause problems later in the process. While calibration problems cause annoying alignment errors in panoramic 2D and 2.5D images, they cause unacceptable distortion in 3D models. Here is a list of variables that must be determined before using a 2D image to create an accurate 3D model:

Camera Variables that must be determined for Precise Stereoscopic 3D Reconstruction

– The exact center of the image sensor behind the lens: sensors are normally a few pixels off-center

– Camera horizontal and vertical field of view to within 1/100 of a degree

– Camera lens distortion correction variables: radial distortion (barrel or pincushion)

– Camera horizontal orientation (0.00 to 360.00 degrees) to within 1/100 of a degree

– Camera vertical orientation (tilt, roll) to within 1/100 of a degree

– Camera location for each shot: X, Y, and Z coordinates to within one millimeter

– Camera dynamic range and gamma
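To get a feel for what these tolerances mean, here is a minimal Python sketch that converts an angular error into a position error. It assumes an idealized pinhole camera with no lens distortion. The 4000-pixel image width, 90-degree field of view, and 10-meter subject distance are made-up illustration values, not measurements from our cameras, and the function names are hypothetical.

```python
import math


def focal_length_px(image_width_px: float, hfov_deg: float) -> float:
    """Pinhole focal length, in pixels, implied by a horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def lateral_error_m(distance_m: float, angle_error_deg: float) -> float:
    """Sideways miss, in meters, of a ray at a given distance
    when the camera orientation is off by angle_error_deg."""
    return distance_m * math.tan(math.radians(angle_error_deg))


def pixel_shift(image_width_px: float, hfov_deg: float, angle_error_deg: float) -> float:
    """Approximate image shift, in pixels near the image center,
    produced by the same orientation error."""
    f_px = focal_length_px(image_width_px, hfov_deg)
    return f_px * math.tan(math.radians(angle_error_deg))


if __name__ == "__main__":
    # Assumed example values (not from a real camera).
    width_px, hfov_deg, distance_m, error_deg = 4000, 90.0, 10.0, 0.01

    print(f"focal length: {focal_length_px(width_px, hfov_deg):.1f} px")
    print(f"miss at {distance_m} m: {lateral_error_m(distance_m, error_deg) * 1000:.2f} mm")
    print(f"shift in image: {pixel_shift(width_px, hfov_deg, error_deg):.2f} px")
```

Under these assumptions, an orientation error of 1/100 of a degree moves a point 10 meters away by a little under 2 millimeters, which is the same order as the one-millimeter camera location tolerance in the list.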

The quality of a 3D model is limited by the quality of the 2D pictures used to make it. Here’s how we calibrate our camera system:

1) Design and build a calibration routine/facility to determine the key camera variables.

2) Design and build a system of cameras that can be easily calibrated.

The important point is that the camera system and the calibration system need to be built for each other: they literally fit together like a lock and key. As we see it, a calibrated system produces “clean” images that simplify and speed up the 3D reconstruction process. Our current 8-camera system (Proto-4F) has been designed to produce sets of calibrated images, and these images are used to automatically produce 3D models.
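To show why “clean” images matter for reconstruction, here is a minimal Python sketch of the standard depth-from-disparity relation for two cameras. It assumes an idealized, rectified, side-by-side pinhole pair, which is a simplification and not a description of how Proto-4F is arranged. The focal length, baseline, and disparity values are made up for illustration, and the function names are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def depth_error_from_disparity_shift(
    focal_px: float, baseline_m: float, disparity_px: float, shift_px: float
) -> float:
    """Change in computed depth when the measured disparity is off by shift_px,
    for example because one image is offset by an uncorrected few pixels."""
    true_depth = depth_from_disparity(focal_px, baseline_m, disparity_px)
    wrong_depth = depth_from_disparity(focal_px, baseline_m, disparity_px + shift_px)
    return wrong_depth - true_depth


if __name__ == "__main__":
    # Assumed example values: 2000 px focal length, 0.2 m between cameras.
    f_px, baseline_m, disparity_px = 2000.0, 0.2, 40.0

    depth = depth_from_disparity(f_px, baseline_m, disparity_px)
    error = depth_error_from_disparity_shift(f_px, baseline_m, disparity_px, 2.0)
    print(f"depth: {depth:.2f} m, error from a 2 px shift: {error:.3f} m")
```

With these assumed numbers, a point 10 meters away is computed almost half a meter too close when the disparity is off by only two pixels. The exact figures depend on the real camera geometry, but the sketch shows how a small uncorrected offset in the images turns into a large error in the model.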